A Breakdown of My Hardware Setup

I've referenced a lot of my hardware in previous blog posts, but after a couple of y'all asked for details on my setup, I wanted to do a dedicated write-up on what I'm running.

This is a picture of my entire server stack, but we're going to break it down a bit more, starting with my networking setup.

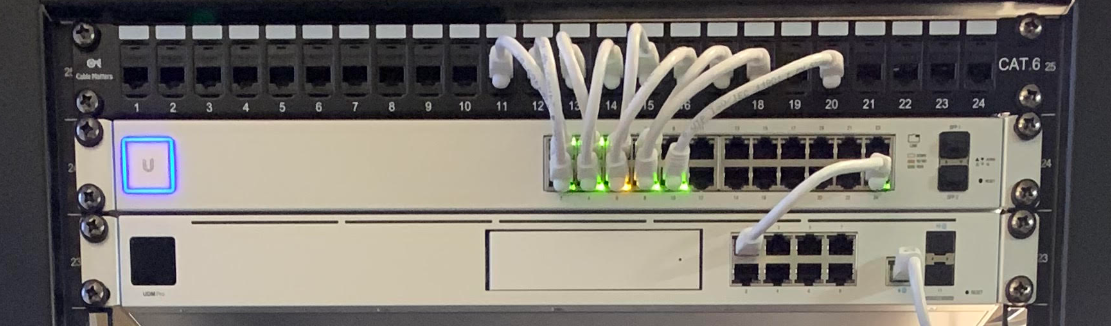

Starting from the top, I have a patch panel with CAT6 keystone couplers. These just help me keep things a bit cleaner and more organized.

Below the patch panel is my Ubiquiti networking setup, which consists of a UDM-Pro and a US-24 switch. While I have several qualms with Ubiquiti and its poor quality control on updates, having a single pane of glass to manage all of my routing and switching is extremely convenient.

At this point, I'm pretty content with my setup and probably won't be making many changes, other than replacing the CAT6 cable that currently bridges the two devices with an SFP cable.

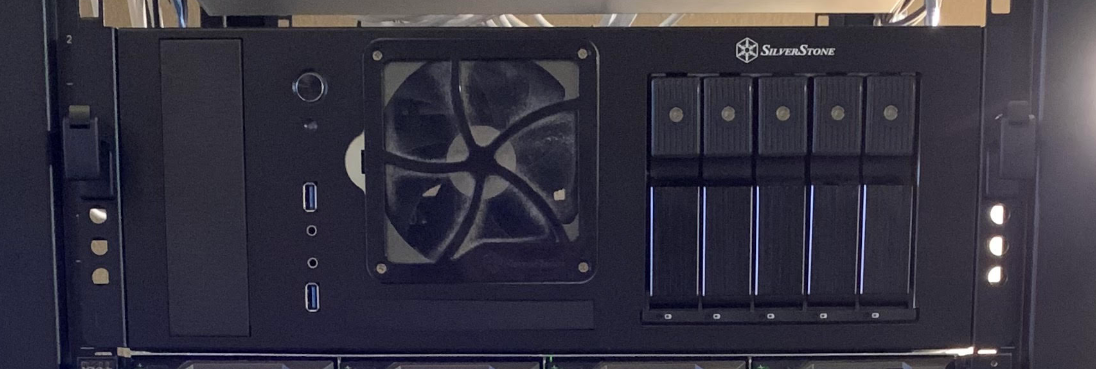

Below my networking gear is a currently dormant Silverstone SST-CS350B chassis that used to house my Plex server. Inside is a Ryzen 7 1800X and an Nvidia GTX 1070. Now that I have a more compact and energy-efficient Plex server (covered later in this post), I haven't quite decided what to do with this hardware, so for now it just sits here, powered off.

Next is my Dell R720xd. You can read a bit more about it here, but to summarize, it's currently running in my vSphere environment with the following specs:

- Dual E5-2620v2 processors

- 128GB of RAM

- 12x1TB drives in RAID10 for a total of 6TB of usable space
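The 6TB figure comes straight from how RAID10 works: drives are paired into mirrors and data is striped across those pairs, so only half of the raw capacity is usable. Here's a quick sketch of that arithmetic in Python. The function name is just for illustration, and it ignores filesystem overhead and the TB vs. TiB difference your OS will report.

```python
def raid10_usable_tb(drive_count: int, drive_size_tb: float) -> float:
    """Return usable RAID10 capacity in TB.

    Every drive is mirrored, so usable space is half the raw total.
    Assumes identical drives; ignores filesystem and controller overhead.
    """
    # RAID10 needs mirrored pairs, and at least two of them to stripe across
    if drive_count < 4 or drive_count % 2 != 0:
        raise ValueError("RAID10 needs an even number of drives, at least 4")
    raw_tb = drive_count * drive_size_tb
    return raw_tb / 2


# 12x 1TB drives, as in the R720xd
print(raid10_usable_tb(12, 1.0))  # 6.0
```

Since drive vendors count in decimal terabytes, expect the OS to show a bit under 5.5 TiB for that 6TB array.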

In the future, I hope to put a couple of Intel SSDs in the rear flex bays to run my Windows VMs on, but for now, everything in my environment is on spinning rust.

Here we see my new and improved Plex server. It's housed in a Silverstone RM21-308, with an Intel Core i5-8600K and 32GB of RAM inside. This has proven to be much, much more power efficient than my old setup, because the 8600K can use its integrated graphics (Intel Quick Sync) to handle transcoding, so a dedicated graphics card is no longer necessary.

Moving to this chassis has also come with some additional benefits: three extra drive bays and a footprint of only 2U in my rack. My only difficulty has been sourcing a cooler that fits under the low ceiling above the processor. Because of that limitation, I went with this cooler, which seems to be working well so far.

Lastly, I have two HP ML350p G8 servers with rack-mount conversions. Both are running dual E5-2620 v2s with 8x 300GB 10k SAS drives. The top one has 128GB of RAM, while the bottom one has its DIMM slots populated with a handful of 4GB DIMMs I had lying around. Both are running ESXi 6.5.

I've enjoyed these servers (the bottom one was my first server ever), but I've run into limitations with storage options. LFF bays are essentially unobtanium, and even additional SFF bays are really tough to come by. I've tried finding ways to fit a couple of SSDs in them to run some of my Windows VMs on, but that didn't pan out either.

For all of those reasons, I ended up buying the R720xd to handle the majority of my storage, including SSD storage, for the time being.

Conclusion

That's everything I'm currently running. I still have quite a bit of hardware that my former boss was kind enough to give me, but I haven't gotten it all integrated into my setup yet. I'll be sure to put out another post once I get all of that figured out.